I’m currently using Munin to keep an eye on the various services running on my main server at UCL CASA. Munin is a monitoring package. It is simple to install (on Ubuntu, sudo apt-get install munin on your primary server, sudo apt-get install munin-node on all servers you want to monitor), and brilliantly simple to set up and – most importantly – extend.

Out of the box, Munin will start monitoring and graphing various aspects of your server, such as CPU usage, memory usage, disk space and uptime. The key is that everything is graphed, so that trends can be spotted and action taken before it’s too late. Munin always collects its data every five minutes, and always presents the graphs in four timescales: the last 24 hours, the last 7 days, the last month and the last year.

Extending Munin to measure your own service or process is quite straightforward. All you need is a shell-executable script which returns key/value pairs representing the metric you want to measure. You also need to add a special response for when Munin wants to configure your plugin. This response sets graph titles, information on what you are measuring, and optionally thresholds for tripping alerts.
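As a rough sketch of that contract, here is a minimal plugin in Python. The metric (the 1-minute load average), the field name `load` and the graph labels are illustrative assumptions, not taken from any plugin shipped with Munin.

```python
#!/usr/bin/env python3
"""Minimal Munin plugin sketch: reports the 1-minute load average."""
import os
import sys


def config_lines():
    # Sent when Munin calls the plugin with the "config" argument.
    return [
        "graph_title Load average (example)",
        "graph_vlabel load",
        "graph_category system",
        "load.label load",
    ]


def value_lines(load):
    # Sent on every normal run: one "<field>.value <number>" line per field.
    return ["load.value %.2f" % load]


def main(argv):
    if len(argv) > 1 and argv[1] == "config":
        lines = config_lines()
    else:
        lines = value_lines(os.getloadavg()[0])
    print("\n".join(lines))


if __name__ == "__main__":
    main(sys.argv)
```

Running the script with no arguments prints the current value, and running it with `config` prints the graph description, which is all Munin needs from a plugin.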

Here’s a typical response from a Munin plugin, “uptime”. Munin uses this value to construct the graph:

    uptime.value 9.29

Here’s what you get when you call it with the config parameter:

    graph_title Uptime
    graph_args --base 1000 -l 0
    graph_scale no
    graph_vlabel uptime in days
    graph_category system
    uptime.label uptime
    uptime.draw AREA

Munin takes this and draws (or reconfigures) the trend graph, with the data and a historical record. Here’s the daily graph for that:

I have two custom processes being monitored with Munin. The first is my minute-by-minute synchronisation of my database (used by OpenOrienteeringMap and, soon hopefully*, GEMMA) with the UK portion of the master OpenStreetMap database. The metric being measured is the time lag. Normally this is around a minute or less, but if there are problems with the feed at either OSM’s end or (more likely) my end, the graph spikes up and the problem can be spotted and dealt with. Also, the subsequent graphing trend, after such an issue, is useful for predicting how quickly things will be back to normal. I’m using an OSM plugin (part of the Osmosis system, which is also doing the synchronisation) rather than writing my own.

The other process is for monitoring the various feeds I have to around 50 cities around the world, to get their current bikes/spaces information for my Bike Share Map. Munin graphs are useful for spotting feeds that temporarily fail, and then hopefully fix themselves, resulting in distinctive “shark fin” trendlines. If one feed doesn’t fix itself, its shark fin will get huge and I will then probably go and have a look. Above is what the daily graph looks like.

I wrote this plugin myself, in two stages. First, my scripts themselves “touch” a “heartbeat” file (one for each script) upon successful execution. When a feed’s file is touched, its modified timestamp updates, and this can then be used to work out how long ago the last successful operation was.

Secondly, my Munin plugin, every five minutes, scans through the folder of heartbeat files (new ones may occasionally appear – they go automatically onto the graph which is nice) and extracts the name and modified timestamp for each file, and reports this back to Munin, which then updates the graphs.

Because Munin allows any shell-executable script, I was able to use my language du jour, Python, to write the plugin.

Here it is – latest_dir is an absolute path to the parent of the heartbeats directory, scheme is the name of the city concerned:

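    #!/usr/bin/python

    import os
    import sys
    import time

    # latest_dir (absolute path to the parent of the heartbeats directory)
    # must be defined before this point.
    filedir = latest_dir + '/heartbeats/'
    files = os.listdir(filedir)
    files.sort()

    # Called with "config": describe the graph and one field per heartbeat file.
    if len(sys.argv) > 1:
        if sys.argv[1] == 'config':
            print("graph_title Bike Data Monitoring")
            print("graph_vlabel seconds")
            for f in files:
                fp = f[:-6]  # strip the '.touch' suffix
                print(fp + ".label " + fp)
            sys.exit()

    # Normal run: report seconds since each heartbeat file was last touched.
    for f in files:
        tdiff = time.time() - os.path.getmtime(filedir + f)
        fp = f[:-6]
        print(fp + ".value " + str(tdiff))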

The middle section is for the config. The part that runs every five minutes is just the gathering of the list of files at the top and the simple measurement at the bottom.

That is really it – there is no other custom code that is producing the graphs like the one at the top of this post – all the colours, historical result storing and HTML production is handled internally in Munin.
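The config section is also where the optional alert thresholds mentioned earlier would go. The following is a sketch of how that could look, not the plugin as it runs here: the `warning` and `critical` values (in seconds) are made-up numbers, and `field_name` is a hypothetical helper. Munin field names are limited to letters, digits and underscores and must not start with a digit, so the helper replaces anything else.

```python
import re


def field_name(filename, suffix=".touch"):
    # Strip the heartbeat suffix, then replace characters Munin does not
    # accept in field names (anything other than letters, digits, underscore).
    stem = filename[:-len(suffix)] if filename.endswith(suffix) else filename
    name = re.sub(r"[^A-Za-z0-9_]", "_", stem)
    # Field names must not start with a digit.
    return "_" + name if name[:1].isdigit() else name


def config_lines(filenames, warning=1800, critical=7200):
    # warning/critical are hypothetical thresholds, in seconds since the
    # last successful update.
    lines = ["graph_title Bike Data Monitoring", "graph_vlabel seconds"]
    for f in sorted(filenames):
        fp = field_name(f)
        lines.append("%s.label %s" % (fp, fp))
        lines.append("%s.warning %d" % (fp, warning))
        lines.append("%s.critical %d" % (fp, critical))
    return lines


print("\n".join(config_lines(["london.touch", "new-york.touch"])))
```

If the field names are sanitised in the config output, the same helper has to be used when printing the `.value` lines so that the two stay in step.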

My code that updates the heartbeat file is even simpler:

    def heartbeat(scheme):
        open(latest_dir + '/heartbeats/' + scheme + '.touch', 'w').close()
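To illustrate how the two halves fit together, here is a self-contained sketch that touches a heartbeat file and then reads its age back, which is the same calculation the plugin performs. It uses a temporary directory in place of latest_dir, and the helper names `touch_heartbeat` and `heartbeat_age` are hypothetical.

```python
import os
import tempfile
import time


def touch_heartbeat(heartbeat_dir, scheme):
    # Create the file if needed and set its modified time to now.
    path = os.path.join(heartbeat_dir, scheme + ".touch")
    with open(path, "a"):
        os.utime(path, None)
    return path


def heartbeat_age(path, now=None):
    # Seconds between `now` and the file's last modification.
    if now is None:
        now = time.time()
    return now - os.path.getmtime(path)


with tempfile.TemporaryDirectory() as d:
    p = touch_heartbeat(d, "london")
    # Pretend five minutes have passed since the last successful run.
    age = heartbeat_age(p, now=os.path.getmtime(p) + 300)
    print("london.value %d" % round(age))
```

Passing `now` explicitly is only there to make the age calculation easy to check. In normal use it defaults to the current time.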

Fingers crossed things won’t go wrong, but if they do, I now have a pleasant, graphical interface for spotting them.

* GEMMA will use OpenStreetMap data for the whole world, but currently it takes longer than a minute to process a minute’s worth of OpenStreetMap database updates, such is the level of activity in the project at the moment, so my minutely-updating database only covers the UK. So, for GEMMA, I am just using a “static” copy of the whole world. OpenOrienteeringMap has two modes, UK and Global – only the UK one uses the updating database.

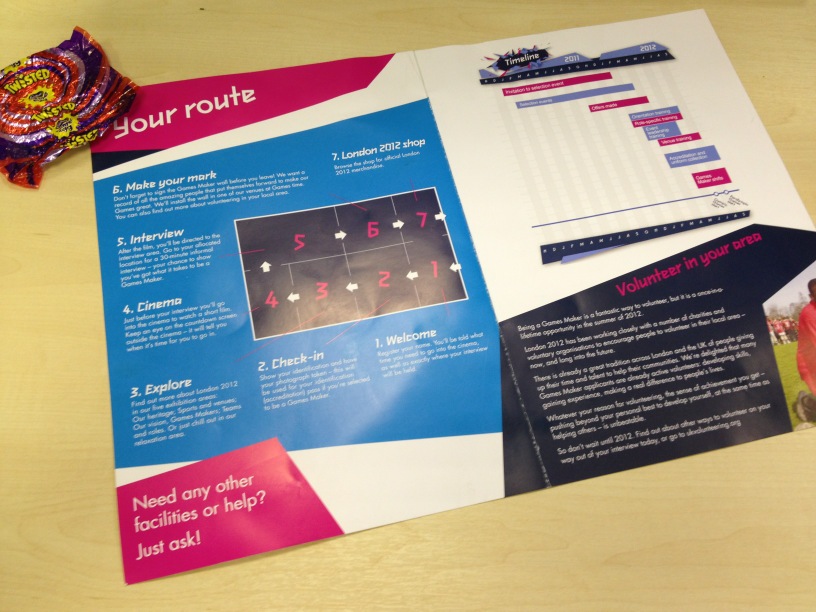

The interviews were on the 19th floor of a skyscraper in Canary Wharf – chosen presumably because of the stunning view north to the Olympic Park, which is looking encouragingly complete these days. I guess some interviewees have found the drop from the 19th floor a bit much, because we were asked if we wanted an interview “pod” away from the windows…
